Tangible Computing: Data You Can Touch

What is Tangible Computing?

Tangible computing is a human-computer interaction paradigm in which digital information is given physical form. The user interacts through a Tangible User Interface (TUI, previously known as a Graspable User Interface), in which the physical representation also serves as the control, so input and output are combined in the same object. Tangible computing was pioneered by the MIT Media Lab’s Tangible Media Group through its Tangible Bits vision:

We are designing “tangible user interfaces” which employ physical objects, surfaces, and spaces as tangible embodiments of digital information. These include foreground interactions with graspable objects and augmented surfaces, exploiting the human senses of touch and kinesthesia. We are also exploring background information displays which use “ambient media” — ambient light, sound, airflow, and water movement. Here, we seek to communicate digitally-mediated senses of activity and presence at the periphery of human awareness.

E. Hornecker expands on this vision with her six characteristics of TUIs. In his PhD defence, Brygg Ullmer, an early pioneer of tangible computing, notes that, as with architecture, TUIs are by their nature as both input and output devices simultaneously aesthetic AND utilitarian; the two aspects cannot be separated.

Tangible computing forms a subset of the field of physical computing, which also includes interfaces that use non-traditional physical input or output devices but do not necessarily merge them into a single input-plus-output affordance. Tangible computing has some small overlap with, but is largely distinct from, embodied computing, which concerns building robotic systems that can adapt to their environment by reconfiguring themselves.

For next week’s class, I have been asked to select possible speakers for the “Awesome New Interfaces” track of the fictitious conference “Tangible Computing: The Past, Present and Future”. My three candidates are as follows:

1) IrukaTact: The power of sonar at your fingertips, literally

IrukaTact (paper) is a system developed at EMP Tsukuba University in Japan that uses haptic feedback to let divers “feel” the results of a sonar echolocation ping. It is inspired by dolphins’ ability to echolocate and could be useful in disaster relief scenarios, such as quickly finding submerged vehicles or homes in murky floodwater. I think this work-in-progress project is particularly interesting because it ties into the broader Human 2.0 concept of augmenting human capability and giving us additional senses. I also think it could be extended to environments other than underwater; in fact, it is similar to Professor Kevin Warwick’s experiments in giving himself a sixth sense via a chip implanted in his arm.

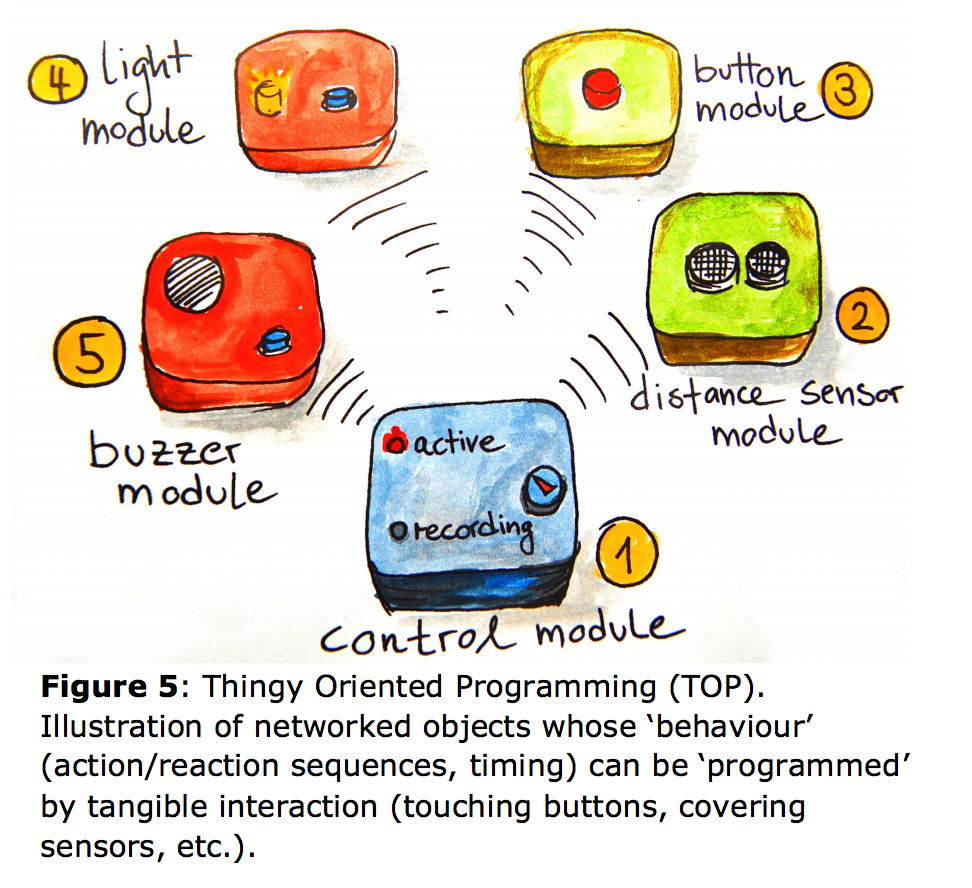

2) Thingy Oriented Programming: Bringing Gesture Control to Your Home

My second candidate is a work-in-progress project by Güldenpfennig et al. at the Vienna University of Technology, known as Thingy Oriented Programming. The idea (which has been developed in early prototype form) is to use a network of small, wirelessly connected sensors, actuators and output devices spread around a home or other environment to “program” a physical space. Not only would this allow users to move computing functions out of their laptops, monitors and keyboards, but it would also bring the concept of “macro recording” into the physical world: you could record a gesture in physical space in much the same way you can program mouse gestures in a browser today. I think this is a particularly exciting prospect, as it is a direct embodiment of the ubicomp idea of “programming environments” suggested by Gregory Abowd in his “What’s Next, Ubicomp?” paper, which I wrote about in “The Future of Computing is Programming for Individuals in their Environments”. It is also a real-world, human-centric application of the “Internet of Things”. The project team are carrying out some interesting explorations of how this technology might be applied in different environments, including smart homes and science classrooms.

3) Relief: Malleable 3D models you can squish, pan, and zoom

My third candidate is Relief [paper 1, paper 2], another MIT Media Lab project, which combines gestural control in 3D space with a tactile, malleable 3D model. This is demonstrated through a Google Earth-like application that lets you pan and zoom over a landscape using gestures and watch a physical 3D relief map of that landscape take shape as you move around. The relief map is also malleable and reshapeable, so it doubles as an input device. This interface is particularly exciting because it offers two forms of input (gesture and touch) and two forms of output (a 2D projection and a 3D model). This is an extremely powerful combination that could be applied to many other areas.

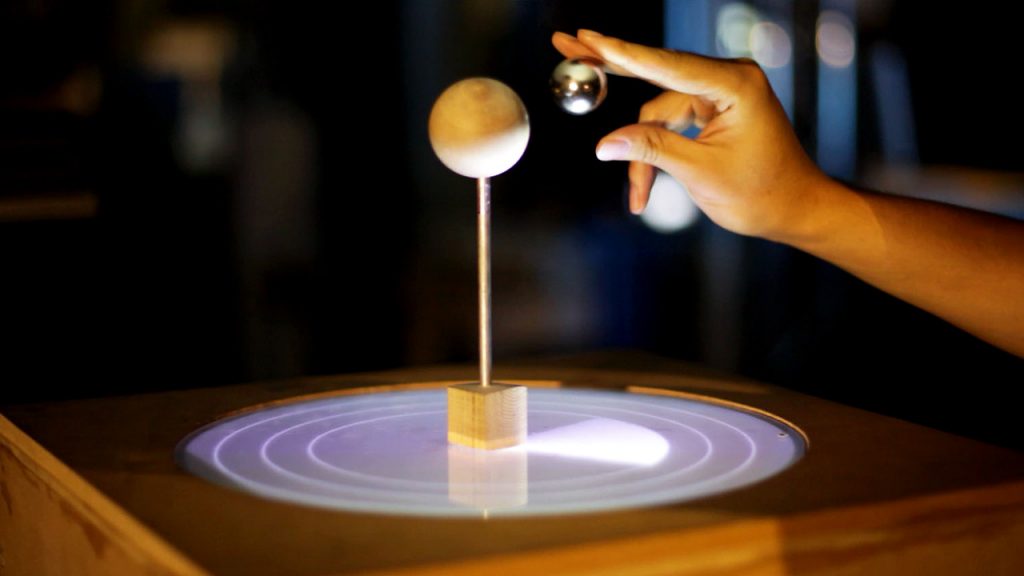

Bonus selection: ZeroN – Control through magnetic levitation

While looking for an interesting image to accompany this post, I came across the ZeroN project, an image of which is shown at the top of this post. It uses magnetic levitation to suspend a ball in 3D space, where it can serve as a representation or an input device, including by generating shadows on the tabletop below. You can watch the video above or read more about it here.
